Cloud-Based Machine Learning Platforms

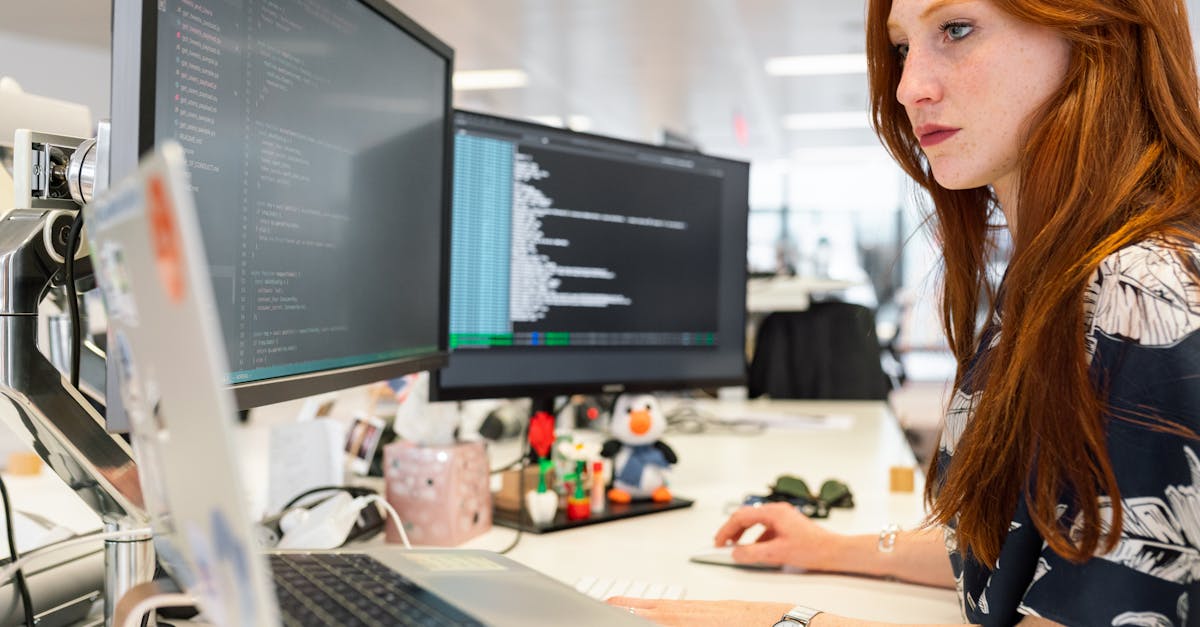

Cloud-based machine learning platforms have revolutionised the way data analysts approach their work. These platforms provide scalable computing power and facilitate collaboration among teams, making it easier to develop, test, and deploy machine learning models. Users can access vast libraries of pre-built algorithms and tools, streamlining the process of building predictive models. This accessibility encourages innovation, allowing analysts to experiment with different techniques without the need for extensive local resources.

Additionally, these platforms often integrate smoothly with existing data storage and processing systems, which makes data easier to access. Built-in analytics and reporting features let analysts visualise their findings in real time and share insights with stakeholders. Because models can be monitored and adjusted as new data arrives, data-driven decisions can be made quickly, which is a further advantage of cloud-based solutions for analytics professionals.

Advantages of Using Cloud Services

Cloud-based machine learning platforms provide significant advantages for data analysts, particularly in terms of scalability and flexibility. Analysts can quickly access vast computing resources without the need for substantial local hardware investments. This makes it easier to handle large datasets and complex algorithms, enabling teams to focus on their analysis rather than managing infrastructure.

Another key benefit lies in the integration capabilities of cloud services. They often come with built-in tools for analytics and reporting, allowing users to visualise data and share insights. Analysis results can then be communicated quickly to stakeholders, supporting informed decision-making across the organisation. Such tools also facilitate collaboration among team members, regardless of their physical location.

Feature Engineering Techniques

Feature engineering is a critical step in the data analysis process, as it significantly influences the effectiveness of machine learning models. Analysts transform raw data into features that better represent the underlying problem, leading to improved predictive performance. Techniques such as normalisation, encoding categorical variables, and creating interaction terms can enhance the model's ability to learn complex relationships. Domain knowledge also plays an essential role in identifying which features are most relevant, ensuring the model captures the nuances of the data.
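
As a rough illustration of the three techniques named above, here is a minimal sketch in plain Python. The column names (`age`, `income`, `region`) and their values are hypothetical toy data, and the helper functions are written for this example only; in practice a library such as pandas or scikit-learn would usually do this work.

```python
def min_max_scale(values):
    """Rescale numbers to the [0, 1] range (min-max normalisation)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no spread, so map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(values):
    """Encode a categorical column as one 0/1 column per category."""
    categories = sorted(set(values))
    return {c: [1 if v == c else 0 for v in values] for c in categories}


def interaction(a, b):
    """Build an interaction term as the element-wise product of two columns."""
    return [x * y for x, y in zip(a, b)]


# Hypothetical toy columns, one entry per customer.
age = [20, 30, 40]
income = [1000, 2000, 4000]
region = ["north", "south", "north"]

age_scaled = min_max_scale(age)
income_scaled = min_max_scale(income)
region_encoded = one_hot(region)
age_x_income = interaction(age_scaled, income_scaled)

print(age_scaled)
print(region_encoded)
print(age_x_income)
```

Scaling before building the interaction term keeps the product on a comparable range to the other features, which is one reasonable ordering rather than the only one.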

In the context of analytics and reporting, effective feature engineering can illuminate valuable insights from large datasets. By selecting or constructing the right features, analysts can streamline their workflows, making it easier to communicate results and trends. Techniques like dimensionality reduction help maintain a manageable number of variables while preserving essential information. This approach not only boosts model accuracy but also enhances the clarity of visualisations and reports, enabling stakeholders to make informed decisions based on solid analytical foundations.
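
The text does not name a specific dimensionality reduction method. One widely used option is principal component analysis (PCA), and the sketch below assumes it. It finds only the first principal component, using power iteration on the covariance matrix, and projects two hypothetical, perfectly correlated columns onto a single variable. The function name and data are illustrative.

```python
import math


def first_principal_component(rows, iterations=100):
    """Return (direction, scores) for the first principal component.

    direction is a unit vector; scores are the centred rows projected onto it.
    """
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centred = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix of the centred data.
    cov = [[sum(c[i] * c[j] for c in centred) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeatedly multiply by cov and renormalise.
    # Assumes the start vector is not orthogonal to the leading direction.
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:  # no variance at all, nothing to project onto
            break
        v = [x / norm for x in w]
    scores = [sum(c[j] * v[j] for j in range(d)) for c in centred]
    return v, scores


# Hypothetical data: the second column is exactly twice the first,
# so a single component captures all of the variation.
rows = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
direction, scores = first_principal_component(rows)
print(direction)
print(scores)
```

Real datasets are rarely this tidy, so an analyst would normally also check how much variance each retained component explains before dropping the rest.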

Improving Model Performance through Feature Selection

Feature selection plays a crucial role in enhancing model performance by identifying the features that contribute most to predictive accuracy. This process involves evaluating each attribute and eliminating those that provide little information. By focusing on essential features, data analysts can simplify their models, reduce overfitting, and improve interpretability. Tools that assist with feature selection can make this process easier, helping analysts uncover patterns that may be hidden in a more complex dataset.
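
Feature selection can be done in many ways. The sketch below shows one simple filter approach, chosen here as an assumption rather than taken from the text: score each feature by the absolute Pearson correlation with the target and keep those above a threshold. The feature names, data, and the 0.5 threshold are all hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        return 0.0
    return cov / (spread_x * spread_y)


def select_features(features, target, threshold=0.5):
    """Keep features whose |correlation| with the target meets the threshold."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    selected = [name for name, score in scores.items() if score >= threshold]
    return selected, scores


# Hypothetical toy dataset.
target = [1, 2, 3, 4, 5]
features = {
    "hours": [2, 4, 6, 8, 10],    # moves in step with the target
    "noise": [3, 1, 3, 1, 3],     # unrelated to the target
    "constant": [7, 7, 7, 7, 7],  # carries no information
}

selected, scores = select_features(features, target)
print(selected)
print(scores)
```

A correlation filter only detects linear, one-feature-at-a-time relationships, so it is a starting point rather than a complete selection strategy.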

Moreover, effective feature selection not only streamlines the modelling process but also plays a significant role in the analytics and reporting phase. Clearer, more concise datasets lead to more understandable reports, which can drive better decision-making. Analysts can present insights more effectively when the data is relevant and manageable. This ultimately enhances both the accuracy of models and the quality of insights derived, enabling organisations to respond with greater agility to emerging trends and challenges.

Model Evaluation and Validation

Model evaluation and validation are critical steps in the machine learning pipeline. These processes ensure that models generalise well to unseen data and perform consistently in various scenarios. A comprehensive evaluation often involves splitting the dataset into training and testing sets. Various metrics, such as accuracy, precision, recall, and F1 score, are used to quantify performance and identify potential improvements.
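
The train/test split described above can be sketched in a few lines of plain Python. The helper name `holdout_split`, the 25% test fraction, and the seed are assumptions made for this illustration.

```python
import random


def holdout_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of rows and split it into (train, test) lists."""
    shuffled = list(rows)
    # A seeded generator makes the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical dataset of 20 record IDs.
rows = list(range(20))
train, test = holdout_split(rows, test_fraction=0.25, seed=42)

print(len(train), len(test))
```

The model is fitted on `train` only, and `test` is held back until the end, so that the reported metrics reflect data the model has not seen.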

Moreover, effective analytics and reporting play a vital role in communicating the results of model evaluations. Visual tools, like confusion matrices and ROC curves, provide clear insights into the model's performance and areas where it might falter. This information becomes invaluable for analysts and stakeholders when making informed decisions about model deployment and further refinements.

Techniques for Assessing Model Accuracy

Assessing model accuracy is a crucial step in the machine learning process, ensuring that models perform effectively on unseen data. A variety of techniques exist for evaluating model performance, with metrics such as accuracy, precision, recall, and F1 score commonly used. Each of these metrics provides insights into different aspects of model prediction capabilities. Analysts should carefully select metrics that align with their specific objectives and the nature of their data, as this choice can significantly impact the perceived effectiveness of the model.
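
To show why the choice of metric matters, the sketch below computes the four metrics from scratch for two sets of predictions on the same hypothetical, imbalanced labels. Both reach the same accuracy, but only one of them ever finds a positive case. The function name and data are illustrative.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    # Ratios with an empty denominator are reported as 0.0 by convention here.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical imbalanced labels: 2 positives among 10 cases.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
always_negative = [0] * 10                     # never predicts the positive class
one_hit = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]       # one hit, one miss, one false alarm

baseline = classification_metrics(y_true, always_negative)
model = classification_metrics(y_true, one_hit)

print(baseline)
print(model)
```

Judged by accuracy alone the two look identical, which is why recall, precision, or F1 is usually reported alongside it when classes are unbalanced.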

Visualisation techniques also play a key role in model evaluation. Confusion matrices offer a clear representation of true versus predicted classifications, allowing data analysts to identify strengths and weaknesses in their model. Analytics and reporting tools can support this process by automating visual outputs, making it easier to communicate findings to stakeholders. With thorough evaluation and a solid understanding of the available assessment techniques, analysts can refine their models and improve predictive accuracy.
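
A confusion matrix is simply a table of counts, with true classes as rows and predicted classes as columns. The following sketch builds one in plain Python and prints it as text; the labels and predictions are hypothetical, and the helper names are chosen for this example.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    """Return counts as a nested list: rows are true labels, columns predicted."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


def format_matrix(matrix, labels):
    """Render the matrix as a small fixed-width text table."""
    header = "true / pred".ljust(12) + "".join(str(l).rjust(6) for l in labels)
    lines = [header]
    for label, row in zip(labels, matrix):
        lines.append(str(label).ljust(12) + "".join(str(c).rjust(6) for c in row))
    return "\n".join(lines)


# Hypothetical two-class example.
labels = ["cat", "dog"]
y_true = ["cat", "cat", "cat", "dog", "dog", "dog"]
y_pred = ["cat", "cat", "dog", "dog", "dog", "cat"]

matrix = confusion_matrix(y_true, y_pred, labels)
print(format_matrix(matrix, labels))
```

Counts on the diagonal are correct predictions, while off-diagonal cells show which classes the model confuses with each other.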

FAQs

What are some popular cloud-based machine learning platforms available for data analysts?

Some popular cloud-based machine learning platforms include Google Cloud AI, Amazon SageMaker, Microsoft Azure Machine Learning, and IBM Watson Studio. These platforms offer various tools and resources for building and deploying machine learning models.

What are the advantages of using cloud services for machine learning?

The advantages of using cloud services for machine learning include scalability, cost-effectiveness, access to powerful computational resources, and the ability to collaborate easily with teams. Cloud services also provide pre-built algorithms and automated machine learning tools that can speed up the development process.

What is feature engineering and why is it important for machine learning models?

Feature engineering is the process of selecting, modifying, or creating new features from raw data to improve the performance of machine learning models. It is important because well-engineered features can help models better capture the underlying patterns in the data, leading to improved accuracy and predictive power.

How can feature selection improve the performance of a machine learning model?

Feature selection can improve model performance by reducing overfitting, decreasing training time, and enhancing model interpretability. By identifying and using only the most relevant features, data analysts can create simpler models that generalise better to unseen data.

What techniques are used for evaluating and validating machine learning models?

Common techniques for evaluating and validating machine learning models include cross-validation, confusion matrices, ROC curves (often summarised by the area under the curve, AUC), and precision and recall metrics. These methods help assess model accuracy and indicate how well the model is likely to perform on new, unseen data.
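
As an illustration of cross-validation, the sketch below generates the train and test indices for k-fold cross-validation in plain Python. The function name, the dataset size of 10, and k = 3 are assumptions for the example. The folds are contiguous, so ordered data should be shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


folds = list(k_fold_indices(10, 3))
for train, test in folds:
    print("train:", train, "test:", test)
```

Each record is used for testing exactly once, and the model's score is typically averaged over the k folds to give a steadier estimate than a single split.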